By Lisa Bensoussan

Artificial Intelligence (AI) is rapidly advancing, bringing both opportunities and challenges. To ensure a responsible and ethical approach to AI development, the European Parliament has taken a significant step toward new transparency and risk-management rules: on May 11th, its lead committees adopted the draft negotiating mandate for the AI Act. These rules, once approved, will be the world’s first comprehensive framework for governing AI systems. The proposed regulations aim to strike a balance between fostering innovation and protecting fundamental rights, while emphasizing the importance of human-centric and ethical AI development. This article provides an overview of the key aspects of these rules and their implications.

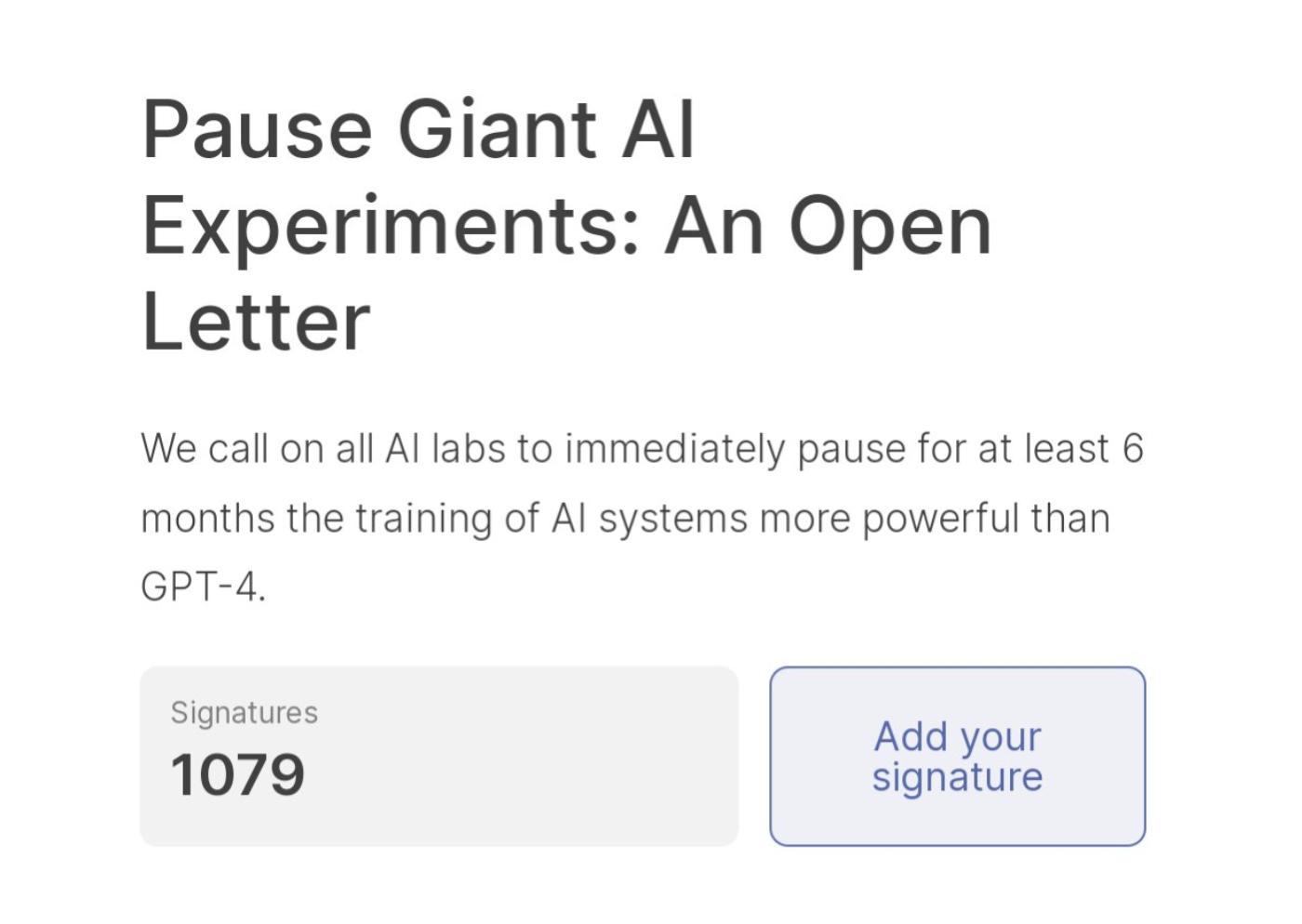

As AI continues to permeate various aspects of society, regulations governing its development and deployment are critically needed. The rapid advancement of AI technology brings immense potential, but it also raises concerns about risks and unintended consequences. Unregulated AI systems could infringe upon fundamental rights, perpetuate biases, invade privacy, and undermine social well-being. In fact, more than 1,000 technology leaders, including Elon Musk, signed an open letter expressing concerns about the profound risks that AI technologies pose to society. They called for a six-month pause in the development of AI systems more powerful than GPT-4 to better understand the dangers involved. By establishing comprehensive rules, the proposed measures aim to ensure transparency, accountability, and ethical practices in AI development, fostering public trust while mitigating harms.

On May 11th, the draft negotiating mandate on the first-ever rules for AI was adopted by the Internal Market Committee and the Civil Liberties Committee. The vote resulted in 84 in favor, 7 against, and 12 abstentions. MEPs made amendments to the Commission’s proposal to ensure that AI systems are supervised by humans and meet criteria of safety, transparency, traceability, non-discrimination, and environmental friendliness. Additionally, they aimed to establish a uniform definition of AI that is technology-neutral, enabling its application to current and future AI systems.

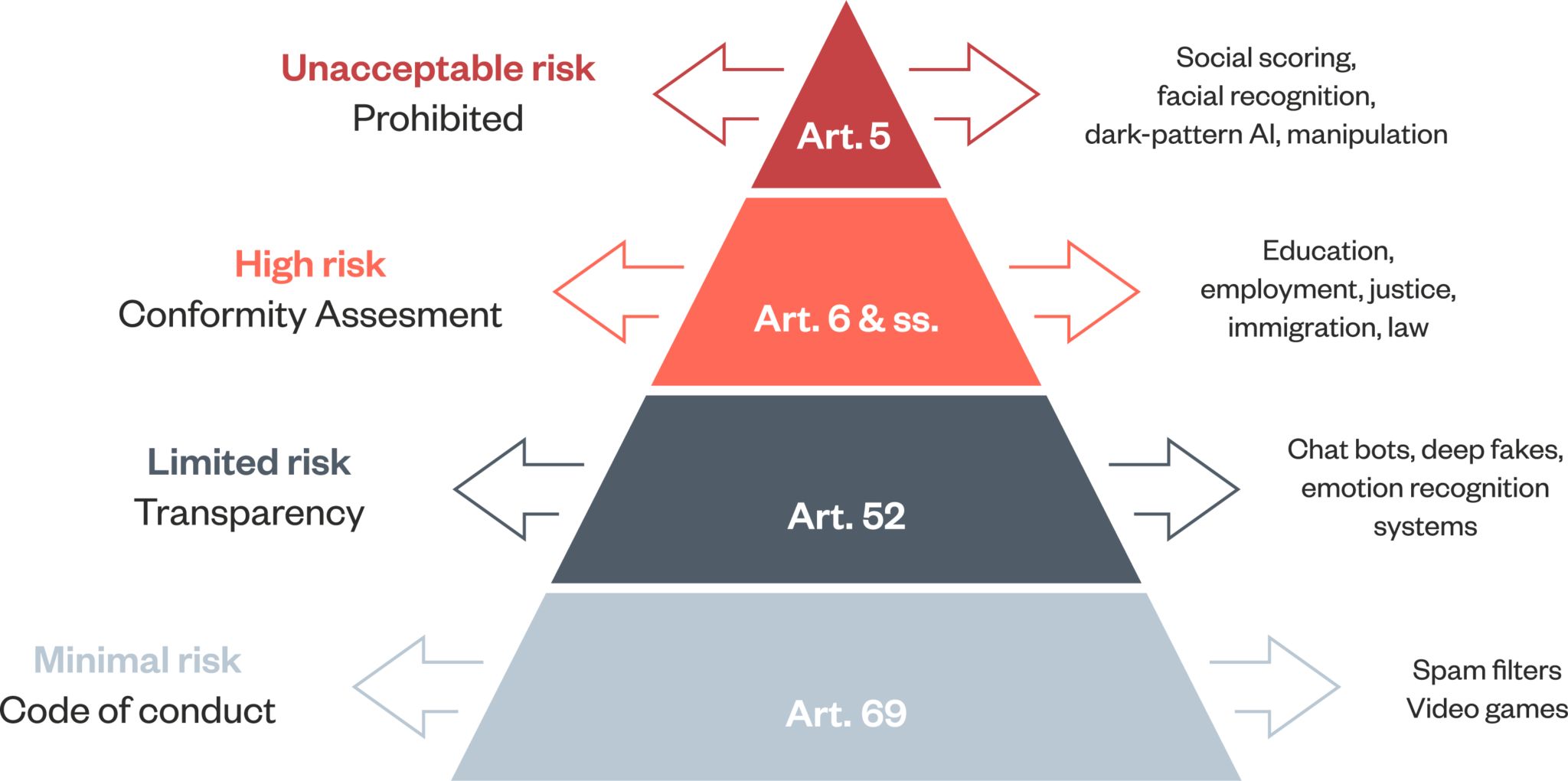

These rules take a risk-based approach, recognizing that the level of risk associated with AI systems can vary significantly. To ensure safety and well-being, the regulations impose obligations on AI providers and users that are proportionate to the level of risk posed by the system.

Notably, the regulations strictly prohibit AI systems that present an unacceptable level of risk to people’s safety. MEPs also introduced specific prohibitions on certain AI practices, such as biometric surveillance based on sensitive characteristics like gender, race, ethnicity, citizenship status, religion, or political orientation, a practice seen in China. The rationale behind these prohibitions lies in safeguarding privacy, preventing discrimination, and avoiding the misuse of AI technology.

To regulate the diverse landscape of AI effectively, the proposed regulations establish tailor-made regimes for different types of AI, including general-purpose AI and foundation models like GPT. Providers of foundation models are subject to additional transparency measures and requirements. They must ensure compliance with fundamental rights, safety standards, and environmental considerations. By imposing these obligations, the regulations seek to address the specific challenges associated with different AI systems.

A significant aspect of the draft regulations is the provision that grants citizens the right to make complaints about AI systems. This right is accompanied by the expectation that individuals will receive explanations for decisions made by high-risk AI systems that significantly impact their rights. By providing avenues for citizens to voice concerns and seek accountability, the EU aims to build trust in AI systems and ensure transparency.

According to the Parliament, one of the key challenges lies in striking the delicate balance between fostering AI innovation and safeguarding fundamental rights. The regulations address this challenge by introducing exemptions for research activities and AI components provided under open-source licenses. These exemptions support continued innovation while ensuring responsible development practices. To ensure the effectiveness of the regulations, the role of the EU AI Office has been reformed to encompass monitoring the implementation of the AI rulebook. This proactive oversight mechanism is crucial for robust governance and enforcement. By monitoring compliance with the regulations, the EU AI Office could detect any potential violations and take necessary actions to rectify them.

The AI Act positions the European Union as a global leader in shaping the development of AI. By endorsing the world’s first comprehensive rules, the EU sets a precedent for ethical and human-centric AI governance. This leadership role carries significant implications for the global political debate on AI governance. The EU’s approach can serve as a model for other nations and international bodies to follow, driving discussions on responsible AI development, transparency, and protection of fundamental rights worldwide. By taking the lead, the EU has the opportunity to influence the global AI landscape and promote a harmonized approach to AI governance based on ethical principles and human values.

However, while the proposed regulations aim to address the challenges associated with AI development, they also face critical questions of their own. Defining AI in a technology-neutral manner may prove difficult, given the rapidly evolving nature of AI systems. Furthermore, the effectiveness of enforcement mechanisms and the ability of regulatory bodies to keep pace with advances in AI technology remain areas of concern. Striking the right balance between regulation and innovation, with accountability and the protection of rights ensured, will be crucial to realizing the full potential of AI and minimizing its risks.

To conclude, the draft negotiating mandate for the regulations on AI awaits endorsement by the entire Parliament before proceeding to negotiations with the Council. The vote is anticipated to take place during the 12-15 June session. Once enacted, these regulations could shape the future of AI not only in Europe but also globally. By promoting responsible development, ensuring accountability, and safeguarding rights within the AI landscape, they pave the way for a human-centric and ethical approach to AI, setting a precedent for the rest of the world to follow. Their potential impact is immense, as they hold the key to a future where AI technologies serve the best interests of society while respecting fundamental rights… or don’t.