By Katya Mavrelli

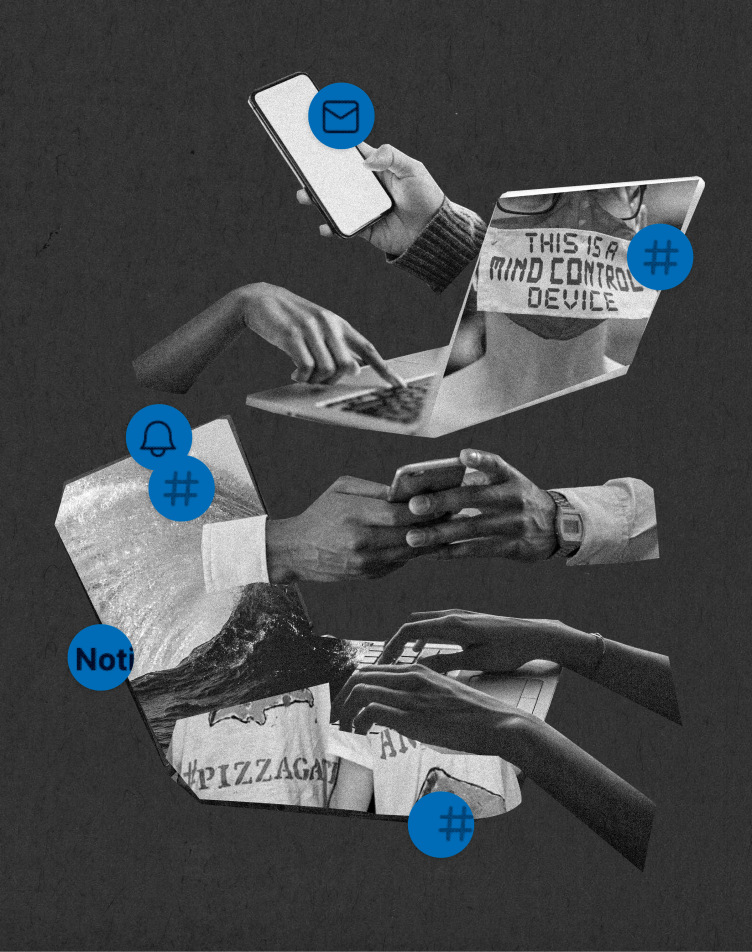

We are still at the beginning of the new year, when expectations are high and promises of a better year are nearly suffocating us, and we all turn our eyes towards the biggest outlet that has ever existed for some guidance about an unpredictable future. The Internet has become the closest tool of every human, across all ages and professional backgrounds. In 2020, the need to contain misinformation and manage the rapid circulation of news reached levels unprecedented for the decade. During this turbulent year, social media platforms acquired a new role, one that will accompany them for decades to come. They became platforms of truth, whose main role was none other than verifying political statements and issuing reports that denounced misleading bulletins. But can these media giants maintain and fortify their newfound integrity? Or will this task be a tad too challenging for them in an already too fast-paced world?

For years, social media platforms had held firm: the fact that a post was false did not mean they had anything to do with it. Their role was not to serve as correctors of misinformation and false statements. 2020 changed their minds.

At the end of May, Twitter labeled a tweet from then-U.S. President Donald Trump as potentially misleading. It was the first time that a fact-checking device was used and was visible to all Twitter users. Within a day, Mark Zuckerberg appeared on Fox News to reassure viewers that Facebook had a “different policy” and that social media platforms should not be the “arbiters of truth”. But as the November 2020 elections approached, much of Trump’s Facebook page and more than a third of his Twitter feed were plastered with warning labels and fact-checks. This was a striking visual manifestation of the way that 2020 transformed the internet.

Among the several aspects of daily life that the pandemic shook to their core was the internet itself. In the face of a public health crisis that was unprecedented in the social-media age, platforms were unusually bold in removing COVID-19 misinformation. At a time when people turned to the media for guidance, it became the platforms' role to convey “the truth, the whole truth and nothing but the truth”. And these giants of information learned a valuable lesson, one that will probably shape their role in the years to come: they should intervene in more and more cases when users post content that might lead to social harm. Content moderation comes to every content platform eventually, and the leaders of the tech market are now beginning to understand the significance of this fact.

The naïve optimism of social media platforms’ early days, when executives insisted that more debate was always the answer to troublesome debate, has expired. Nothing symbolizes this better than Facebook’s decision in October to start banning Holocaust denial, a turning point for one of the biggest free speech platforms in the world. The evolution continued in December, when Facebook announced that it would join platforms such as YouTube and TikTok in removing false claims about COVID-19 vaccines.

The tide changed, however, when Twitter intervened actively and decisively for the first time by banning Donald Trump from its platform. Just one week later, the Washington Post published a report claiming that misinformation about election fraud had fallen by a whopping 73%. Twitter’s ban came two days after the storming of the U.S. Capitol, as a response to the continuous dissemination of false claims and misleading facts, and with it the company acquired the role of a regulator in the information market. Twitter was not the only one to muzzle the outgoing President, however: Facebook banned Trump on the 7th of January, and Snapchat silenced him on the 13th of January due to concerns about incitement to violence.

As platforms grow more comfortable with their power, they recognize that they have options beyond taking down posts or leaving them up. The discussion soon becomes much more critical and revolves around someone else having a say in what can and cannot be said. The moderation of misinformation, the management of information deemed unwanted according to what some consider arbitrarily set criteria, and other functions of social media are under the microscope now more than ever. The issue is not simply the management of the false information that penetrates the internet; it is the horizontal subjection of all users of these platforms to the same rules, which seems to call freedom of speech and expression into question.

The fundamental opacity of this system remains, no matter how rapidly the platforms progress. When social media platforms announce new policies, assessing whether they can and will enforce them consistently has always been a challenge. Who can ensure that these newfound regulations will be applied and that the standards will indeed be enforced? The vastness of content in these networks limits the ability of social media executives to keep track of everything they intend to bring under some control. And the simplicity that makes the concept of information management so alluring is ultimately the challenge that has to be overcome if these platforms are to become something other than fora of speech and exchange of ideas.

As platforms cracked down on harmful content, others saw an opportunity and marketed themselves as ‘free speech’ refuges for aggrieved users. Conservatives who were fact-checked by Facebook and Twitter soon switched to other platforms, seeking other means to keep up their daily content sharing without owning up to the circulation of fake news that they were a part of.

The whole media ecosystem is constantly evolving and constantly trying to keep up with changes in every sector of life, and it constantly comes under attack over the small tweaks applied to create a well-functioning virtual space. No one says that we should bow down to the tech giants, agree to the minimization of our ability to freely share our thoughts and ideas, and become simple users in an endless sea of monitoring. But some, if not most, of the readers of this article may also agree that the massive waves of misinformation and fake news that are threatening to destabilise our political systems have to be contained in some way. Delegating this task to someone with the skills, knowledge and ability remains the tricky part. But if not now, then when?

References

- Brian Fung, CNN Business, ‘Twitter labeled Trump tweets with a fact check for the first time’, May 27th 2020.

- Yael Halon, Fox News, ‘Zuckerberg knocks Twitter for fact-checking Trump, says private companies shouldn’t be the “arbiter of truth”’, May 27th 2020.

- Monika Bickert, VP of Content Policy, Facebook, ‘Removing Holocaust Denial Content’, October 12th 2020.

- Kang-Xing Jin, Head of Health, Facebook, ‘Keeping People Safe and Informed About the Coronavirus’, December 18th 2020.

- Mike Isaac and Kellen Browning, The New York Times, ‘Fact-Checked on Facebook and Twitter, Conservatives Switch Their Apps’, November 11th 2020.